Deploying a Local LLM + RAG Chatbot

A Customer PoC on Single-Cell RNA-Seq Best Practices

Overview

This article describes a proof-of-concept (PoC) project for a customer seeking to build a fully local, domain-specific chatbot powered by Retrieval-Augmented Generation (RAG). The goal was to evaluate how well a lightweight language model, deployed on a CPU-based Linux machine, could deliver accurate, structured, and interactive answers based on a curated corpus of best-practice knowledge in single-cell RNA-sequencing (scRNA-seq).

Problem Statement: Why This Matters

Researchers and bioinformaticians working with single-cell RNA-seq data often face steep learning curves. There are dozens of notebooks, white papers, and tutorials—but no easy way to query this body of knowledge interactively, especially in air-gapped or sensitive computing environments.

The customer wanted a locally hosted chatbot that could:

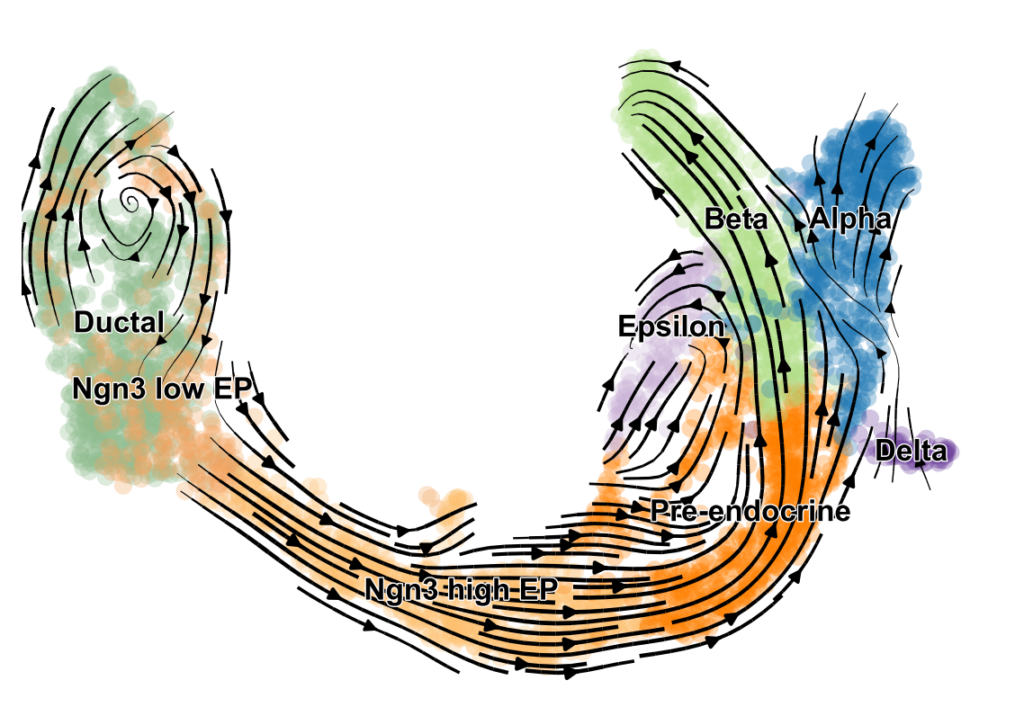

Understand highly technical questions about RNA velocity, pseudotime, batch correction, and preprocessing.

Retrieve precise, context-aware answers from their internal corpus of scientific documents.

Run entirely offline, without depending on cloud APIs or GPU acceleration.

Technical Architecture

Language Model: phi-3.5-mini (3.8B) running locally on a CPU machine (Linux).

RAG Stack: Local embedding model (e.g., all-MiniLM) + Chroma vector DB.

Corpus: Best-practice guides and Jupyter notebooks from the single-cell field (e.g., scvelo, single-cell-best-practices).

Indexing: Documents chunked by semantic section (e.g., glossary, pseudotime.ipynb), embedded and indexed locally.

Frontend: Lightweight chat UI or CLI for direct question input.
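The indexing and retrieval flow listed above can be illustrated with a minimal, standard-library-only sketch. It is not the project's code: a bag-of-words counter stands in for the all-MiniLM embedding model, an in-memory list stands in for the Chroma collection, and the class name `ToyIndex`, the chunk IDs, and the chunk texts are all hypothetical placeholders.

```python
import math
import re
from collections import Counter


def embed(text):
    """Bag-of-words term counts; a crude stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyIndex:
    """In-memory stand-in for a vector database collection (hypothetical)."""

    def __init__(self):
        self.items = []  # list of (chunk_id, text, vector)

    def add(self, chunk_id, text):
        self.items.append((chunk_id, text, embed(text)))

    def query(self, question, k=2):
        """Return the k chunks most similar to the question."""
        q = embed(question)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[2]), reverse=True)
        return [(chunk_id, text) for chunk_id, text, _ in ranked[:k]]


# One chunk per semantic section; the texts are illustrative placeholders.
index = ToyIndex()
index.add("glossary", "Pseudotime orders cells along a trajectory by transcriptional similarity.")
index.add("pseudotime.ipynb", "Latent time is estimated from RNA velocity dynamics of spliced and unspliced counts.")
index.add("batch.ipynb", "Batch correction removes technical variation between sequencing runs.")

top = index.query("How is latent time estimated from RNA velocity?", k=1)
print(top[0][0])
```

In a real deployment the `embed` function and `ToyIndex` class would be replaced by the local embedding model and the vector database, while the chunk-per-section layout and the top-k query would stay the same in spirit.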

Evaluation Methodology

How We Measured Chatbot Performance

To quantify model performance, we designed a custom evaluation framework:

Baseline QA Set: 39 handcrafted questions covering core concepts, from kinetic ODEs to latent time estimation, as well as pipeline-specific steps such as velocity graph construction.

Run 1: Pose all questions to the base LLM without any retrieval.

Run 2: Pose the same questions through the RAG pipeline (i.e., with the retrieved chunks supplied as context).

Evaluation Criteria: Each answer was scored on:

Accuracy & Domain Relevance

Completeness

Clarity & Structure

Practical Utility
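A two-run comparison of this kind might be organized roughly as follows. This is a sketch under assumptions, not the harness used in the PoC: `build_prompt`, `run_condition`, `fake_generate`, and `fake_retrieve` are hypothetical names, the prompt wording is invented for illustration, and the fake functions stand in for the local model and the retriever.

```python
from typing import Callable, Dict, List, Optional


def build_prompt(question: str, context_chunks: List[str]) -> str:
    """Run 1 passes no chunks; Run 2 prepends the retrieved chunks as context."""
    if not context_chunks:
        return f"Question: {question}\nAnswer:"
    context = "\n\n".join(context_chunks)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


def run_condition(
    questions: List[str],
    generate: Callable[[str], str],
    retrieve: Optional[Callable[[str], List[str]]] = None,
) -> Dict[str, str]:
    """Ask every question once; use retrieval only if a retriever is given."""
    answers = {}
    for q in questions:
        chunks = retrieve(q) if retrieve else []
        answers[q] = generate(build_prompt(q, chunks))
    return answers


# Stand-ins for the local LLM and the retriever (assumptions for this sketch).
def fake_generate(prompt: str) -> str:
    return "with context" if prompt.startswith("Context:") else "no context"


def fake_retrieve(question: str) -> List[str]:
    return ["(retrieved chunk text would go here)"]


questions = ["What is latent time?"]
baseline_answers = run_condition(questions, fake_generate)
rag_answers = run_condition(questions, fake_generate, retrieve=fake_retrieve)
print(baseline_answers, rag_answers)
```

Keeping the question list and the generation function identical across both runs means the only variable that changes is whether context is supplied.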

Results & Insights

The RAG-enhanced responses outperformed the baseline in all categories:

+0.82 improvement in accuracy

+1.64 in completeness

+2.28 in clarity

+0.85 in utility

These improvements were especially noticeable in questions requiring multi-step reasoning or referencing multiple parts of the corpus (e.g., latent time vs pseudotime).
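One plausible way to arrive at per-criterion figures like these is to average the paired score differences (RAG minus baseline) over all questions. The article does not state the aggregation or the scoring scale, so the sketch below is an assumption; the function name `mean_deltas` is hypothetical and the scores are made up for illustration, not taken from the PoC.

```python
from statistics import mean

CRITERIA = ("accuracy", "completeness", "clarity", "utility")


def mean_deltas(baseline_scores, rag_scores):
    """Per-criterion mean of (RAG score - baseline score), paired by question."""
    deltas = {}
    for criterion in CRITERIA:
        diffs = [r[criterion] - b[criterion] for b, r in zip(baseline_scores, rag_scores)]
        deltas[criterion] = round(mean(diffs), 2)
    return deltas


# Made-up scores for two questions (illustrative only, not the study's data).
baseline = [
    {"accuracy": 3, "completeness": 2, "clarity": 2, "utility": 3},
    {"accuracy": 4, "completeness": 3, "clarity": 3, "utility": 3},
]
rag = [
    {"accuracy": 4, "completeness": 4, "clarity": 4, "utility": 4},
    {"accuracy": 4, "completeness": 4, "clarity": 5, "utility": 4},
]

deltas = mean_deltas(baseline, rag)
print(deltas)
```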

Key Features

Personalized onboarding

Can I run a chatbot with my own data on a local Linux machine, without cloud dependencies?

Yes. This project showed that a CPU-based setup can power real-world scientific chatbots, although inference is considerably slower than on a GPU.

Can small models like phi-3.5 deliver usable results on niche domains like single-cell genomics?

Yes, especially with RAG, which improves answer precision without requiring a larger model.

What kind of content works well in a RAG corpus?

Structured tutorials, source code, best-practice guides, and Jupyter notebooks—especially when chunked by semantic topic.

How can I evaluate chatbot performance?

Define question sets and scoring rubrics. Track accuracy, completeness, clarity, and utility.

Is there a risk of LLM hallucination or bias?

Always, but RAG, version control, and blinded evaluations help mitigate that risk.

🧠 Was There Bias in Scoring?

Short answer: not in the scoring logic itself, but there is a possibility of cognitive bias in how I framed and interpreted the results, because I inferred that one of the two models was “RAG-enhanced.”

Here’s What Happened:

The actual scoring functions (accuracy, completeness, clarity, utility) were:

Heuristically applied

Based only on content length, keyword richness, sentence count, and phrasing clues

Executed identically for both models

✅ So the math wasn’t biased.
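To make the idea of a heuristic, content-only scorer concrete, here is a minimal sketch that extracts the kinds of signals named above (length, keyword richness, sentence count, phrasing clues) with one function applied identically to any answer. The actual scoring functions are not given in the article, so the function name `heuristic_features`, the keyword set, and the phrasing pattern are all hypothetical.

```python
import re

# Hypothetical domain keyword list; the real list is not given in the article.
DOMAIN_KEYWORDS = {"velocity", "pseudotime", "latent", "spliced", "unspliced", "batch"}

# Hypothetical "phrasing clue": words that suggest a step-by-step explanation.
STEP_PATTERN = re.compile(r"\b(first|then|next|finally|step)\b")


def heuristic_features(answer: str) -> dict:
    """Extract surface features from an answer; no knowledge of its source."""
    lowered = answer.lower()
    words = re.findall(r"[a-z0-9']+", lowered)
    sentences = [s for s in re.split(r"[.!?]+", answer) if s.strip()]
    return {
        "length": len(words),
        "keyword_hits": len(DOMAIN_KEYWORDS & set(words)),
        "sentences": len(sentences),
        "has_steps": bool(STEP_PATTERN.search(lowered)),
    }


feats = heuristic_features("First, compute RNA velocity. Then estimate latent time.")
print(feats)
```

Because the function sees only the answer text, it cannot favor one model by construction. Surface features of this kind do reward longer, keyword-dense answers, though, which is worth keeping in mind when interpreting the scores.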

However, my expectation (assuming that model B was the RAG-enhanced one) could have:

Influenced how I described performance (“significantly outperforms”)

Created a confirmation bias in qualitative interpretation
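One common way to reduce this kind of expectation effect is to blind the comparison: shuffle which answer is shown as "A" and which as "B" for each question, and keep the mapping in a separate key that is only consulted after scoring. The sketch below assumes answers are stored as dictionaries keyed by question; `blind_pairs` and the example answers are hypothetical.

```python
import random


def blind_pairs(baseline, rag, seed=0):
    """Randomly assign each question's two answers to labels A and B.

    Returns the blinded items for the rater and a separate unblinding key.
    """
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    blinded, key = [], {}
    for question in baseline:
        pair = [("baseline", baseline[question]), ("rag", rag[question])]
        rng.shuffle(pair)
        blinded.append({"question": question, "A": pair[0][1], "B": pair[1][1]})
        key[question] = {"A": pair[0][0], "B": pair[1][0]}
    return blinded, key


# Placeholder answers for illustration only.
baseline = {"q1": "baseline answer 1", "q2": "baseline answer 2"}
rag = {"q1": "rag answer 1", "q2": "rag answer 2"}

blinded, key = blind_pairs(baseline, rag)
print(blinded[0]["question"], sorted(key["q1"].values()))
```

The rater sees only the `blinded` items; the `key` is opened after all scores and written interpretations are final.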